How the web gives us what we want to see, and why that’s not necessarily a good thing.

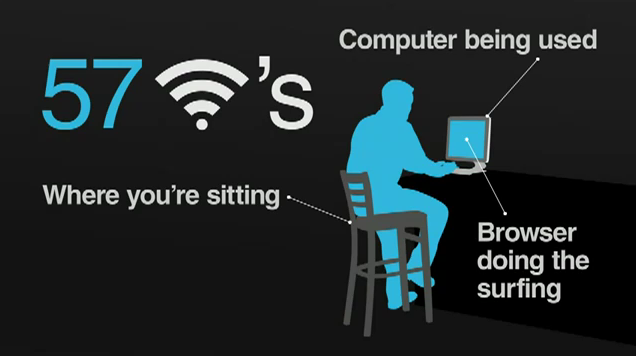

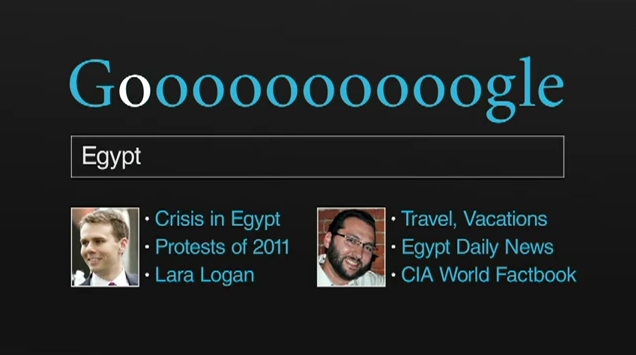

Most of us are aware that our web experience is somewhat customized by our browsing history, social graph and other factors. But this sort of information-tailoring takes place on a much more sophisticated, deeper and far-reaching level than we dare suspect. (Did you know that Google takes into account 57 individual data points before serving you the results you searched for?)

That’s exactly what Eli Pariser, founder of public policy advocacy group MoveOn.org, explores in his fascinating and, depending on where you fall on the privacy spectrum, potentially unsettling new book, The Filter Bubble: a compelling deep-dive into the invisible algorithmic editing on the web, a world where we’re being shown more of what algorithms think we want to see and less of what we should see.

I met Eli in March at TED, where he introduced the concepts from the book in one of this year’s best TED talks. Today, I sit down with him to chat about what exactly “the filter bubble” is, how much we should worry about Google, and what our responsibility is as content consumers and curators — exclusive Q&A follows his excellent TED talk:

“The primary purpose of an editor [is] to extend the horizon of what people are interested in and what people know. Giving people what they think they want is easy, but it’s also not very satisfying: the same stuff, over and over again. Great editors are like great matchmakers: they introduce people to whole new ways of thinking, and they fall in love.” ~ Eli Pariser

What, exactly, is “the filter bubble”?

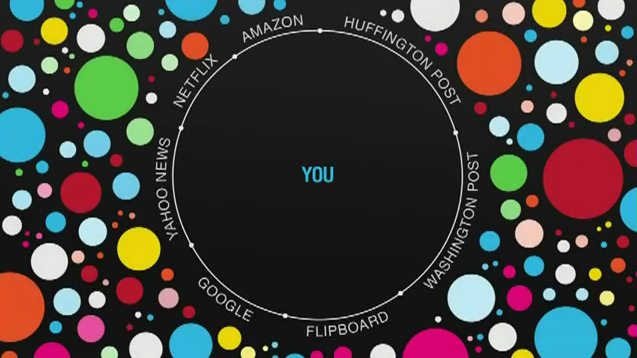

EP: Your filter bubble is the personal universe of information that you live in online — unique and constructed just for you by the array of personalized filters that now power the web. Facebook contributes things to read and friends’ status updates, Google personally tailors your search queries, and Yahoo News and Google News tailor your news. It’s a comfortable place, the filter bubble — by definition, it’s populated by the things that most compel you to click. But it’s also a real problem: the set of things we’re likely to click on (sex, gossip, things that are highly personally relevant) isn’t the same as the set of things we need to know.


How did you first get the idea of investigating this?

In an age of information overload, algorithms are certainly more efficient at finding the most relevant information about what we’re already interested in. But it’s human curators who point us to the kinds of things we didn’t know we were interested in until, well, until we are. How does the human element fit into the filter bubble, and what do you see as the future of striking this balance between algorithmic efficiency and curatorial serendipity?

One interesting place this comes up is at Netflix — the basic math behind the Netflix code tends to be conservative. Netflix uses an algorithm called Root Mean Squared Error (RMSE, to geeks), which basically calculates the “distance” between different movies. The problem with RMSE is that while it’s very good at predicting what movies you’ll like — generally it’s under one star off — it’s conservative. It would rather be right and show you a movie that you’ll rate a four, than show you a movie that has a 50% chance of being a five and a 50% chance of being a one. Human curators are often more likely to take these kinds of risks.
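For the curious, here is a rough sketch of why optimizing for RMSE can make a recommender play it safe. RMSE is usually described as a measure of prediction error (the square root of the average squared gap between predicted and actual ratings) that a system tries to minimize. Everything below is illustrative: the function names and the two made-up movies are my own assumptions built on the four-star versus five-or-one example above, not anything taken from Netflix.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error between two equal-length lists of ratings."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    squared_gaps = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_gaps) / len(squared_gaps))


def best_single_guess(outcomes):
    """The one prediction that minimizes expected squared error is the
    probability-weighted average of the possible ratings."""
    return sum(prob * rating for rating, prob in outcomes)


def expected_rmse(guess, outcomes):
    """Expected root mean squared error of one guess, given a list of
    (rating, probability) pairs describing how you might rate the movie."""
    return math.sqrt(sum(prob * (guess - rating) ** 2 for rating, prob in outcomes))


# Hypothetical movies, taken from the example in the interview:
safe_movie = [(4, 1.0)]              # you will rate it a four
risky_movie = [(5, 0.5), (1, 0.5)]   # coin flip between a five and a one

safe_guess = best_single_guess(safe_movie)
risky_guess = best_single_guess(risky_movie)

print("safe movie: predict", safe_guess, "expected error", expected_rmse(safe_guess, safe_movie))
print("risky movie: predict", risky_guess, "expected error", expected_rmse(risky_guess, risky_movie))
print("risky movie, bold guess of 5: expected error", expected_rmse(5, risky_movie))
```

Under these assumptions the error-minimizing prediction for the coin-flip movie is its average, a lukewarm three, and guessing a bold five only makes the expected error worse. A system that ranks by predicted rating will therefore surface the reliable four first, even though the other film might have become a favorite.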

How much does Google really know about us, in practical terms, and — more importantly — how much should we care?

Most of the time, this doesn’t have much practical consequence. But one of the problems with this kind of massive consolidation is that what Google knows, any government that is friends with Google can know, too.

“Companies like Yahoo have turned over massive amounts of data to the US government without so much as a subpoena.” ~ Eli Pariser

I’d also argue there’s a basic problem with a system in which Google makes billions off of the data we give it without giving us much control over how it’s used or even what it is.

Do you think that we, as editors and curators, have a certain civic responsibility to expose audiences to viewpoints and information outside their comfort zones in an effort to counteract this algorithmically-driven confirmation bias, or are people better left unburdened by conflicting data points?

Is it possible to reconcile personalization and privacy? What are some things we could do in our digital lives to strike an optimal balance?

On an individual level, I think it comes down to varying your information pathways. There was a great This American Life episode which included an interview with the guy who looks at new mousetrap designs at the biggest mousetrap supply company. As it turns out, there’s not much need for a better mousetrap, because the standard trap does incredibly well, killing mice 90% of the time.

The reason is simple: Mice always run the same route, often several times a day. Put a trap along that route, and it’s very likely that the mouse will find it and become ensnared.

So, the moral here is: don’t be a mouse. Vary your online routine, rather than returning to the same sites every day. It’s not just that experiencing different perspectives and ideas and views is better for you; serendipity can be a shortcut to joy.

Ed. note: The Filter Bubble is out today and is one of the timeliest, most thought-provoking books I’ve read in a long time: required reading as we embrace our role as informed and empowered civic agents in the world of web citizenship.
